Running CloudQuery in AWS Lambda

Learn how to deploy CloudQuery on AWS Lambda and RDS (Guest Blog)

Introduction

CloudQuery transforms your cloud infrastructure into a queryable SQL database for easy monitoring, governance, and security. It also ships built-in SQL queries that run common compliance checks against those resources. If you need a consolidated SQL database of your cloud resources, CloudQuery might be the tool for you.

CloudQuery on AWS

By default, CloudQuery is configured to run locally and save resource state to a local PostgreSQL database. To get the most out of CloudQuery, though, you may want to store your cloud resource data in a network-accessible database so others can query it, and run CloudQuery on a schedule so the database picks up new resources. On AWS you can do both with serverless technologies: run CloudQuery in AWS Lambda and store the results in an Aurora Serverless database.

The CloudQuery GitHub repository contains an example deployment for this setup. To deploy it, all you need is a recent version of Terraform, Docker, and an AWS account.

To deploy CloudQuery on AWS Lambda with an Aurora database backend, first clone the CloudQuery repository:

```shell
git clone https://github.com/cloudquery/cloudquery.git
```

Preparing the deployment

Run `make build` to create an AWS Lambda-compatible binary. The binary is copied into the bin directory; Terraform then zips up the bin directory and uploads it to an S3 bucket, from which the Lambda function loads its code.

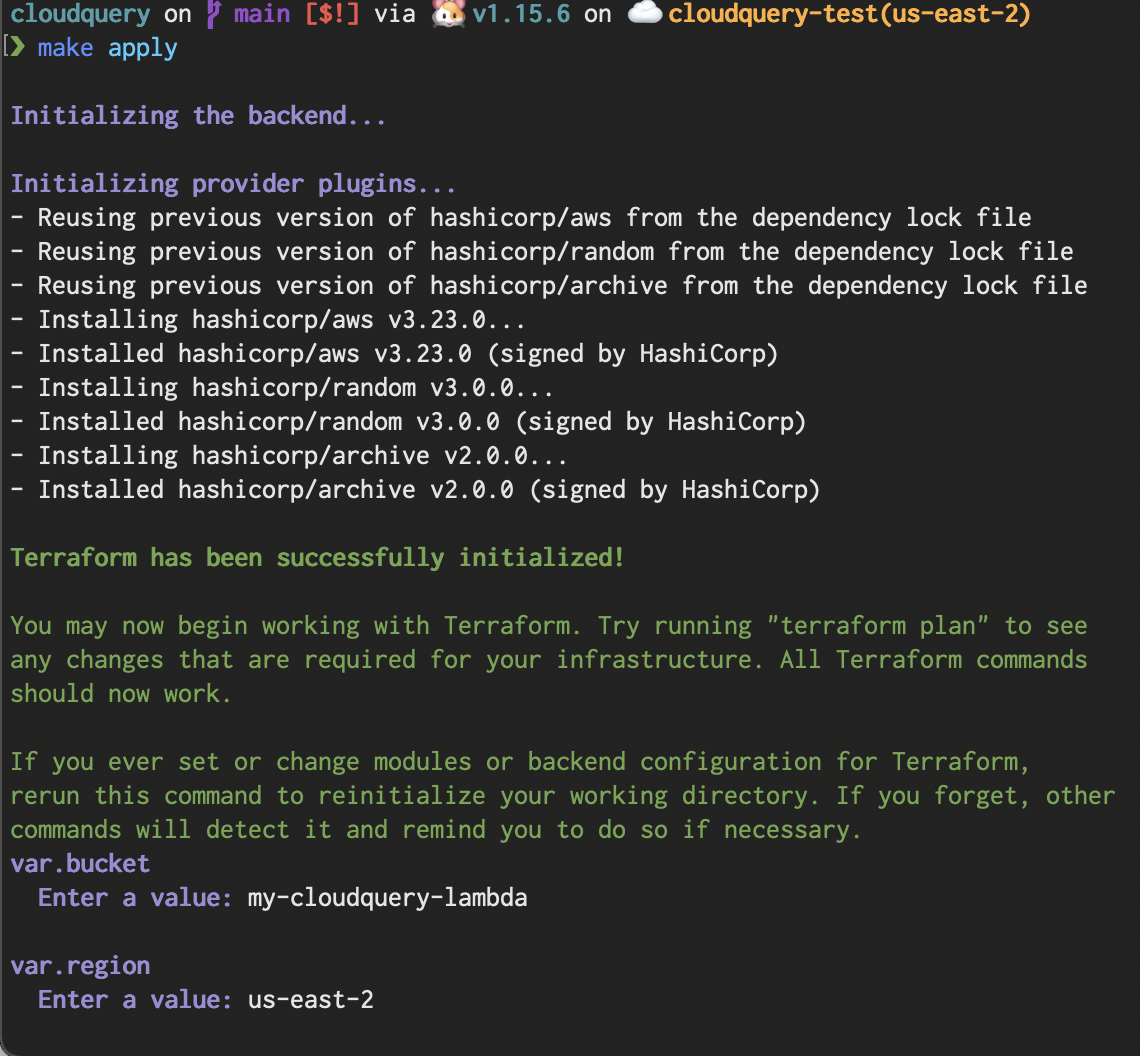

Make sure credentials for an AWS account are configured in your environment, then run `make apply` to deploy CloudQuery to AWS.

Running this creates:

- A VPC that hosts your Lambda and the Aurora Serverless database

- An Aurora Serverless database

- A Lambda function with environment variables that CloudQuery reads to connect to the database

- An encrypted SSM parameter containing the master password for the database

- A NAT gateway with an Elastic IP address attached, so Lambdas in the VPC can communicate with other AWS regions or accounts

Terraform will prompt you for a bucket name and the region to deploy the resources in.

The serverless database is configured to scale up to 8 GB of RAM under load. You may need to raise the maximum capacity if you run many CloudQuery Lambdas concurrently or query the database heavily. Aurora Serverless is configured to scale down to zero capacity when idle, which keeps costs low if the database is used infrequently.
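To put the 8 GB figure in capacity terms: assuming this is Aurora Serverless v1, where one Aurora capacity unit (ACU) corresponds to roughly 2 GB of memory, 8 GB is about 4 ACUs. The hypothetical helper below rounds a desired memory ceiling up to the next capacity step. The step list is the one documented for Aurora PostgreSQL Serverless v1 and is an assumption here; verify it against current AWS documentation before changing the max capacity in Terraform.

```python
# ASSUMPTIONS (verify against current AWS docs): Aurora Serverless v1,
# roughly 2 GB of memory per ACU, and the capacity steps listed for
# Aurora PostgreSQL Serverless v1.
GB_PER_ACU = 2
VALID_ACUS = (2, 4, 8, 16, 32, 64, 192, 384)


def max_capacity_for(ram_gb):
    """Return the smallest valid ACU setting that provides at least ram_gb of memory."""
    needed = -(-ram_gb // GB_PER_ACU)  # ceiling division
    for acu in VALID_ACUS:
        if acu >= needed:
            return acu
    raise ValueError(f"{ram_gb} GB exceeds the largest capacity step")


print(max_capacity_for(8))   # 4
print(max_capacity_for(20))  # 16
```

Rounding up rather than down keeps the ceiling at or above the memory you asked for, at the cost of occasionally allowing more capacity than strictly needed.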

Multi-account configurations

If you fetch data from multiple accounts with CloudQuery, you need to modify the IAM role attached to the Lambda so that it can assume the roles you have specified in your other accounts. Likewise, the roles in your other accounts need their trust policies updated to allow the Lambda to assume them.
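Concretely, these two changes are two IAM policy documents. The sketch below builds them in Python so the structure is explicit. The account IDs and role names are hypothetical placeholders, and the documents follow general IAM policy grammar rather than anything taken from the example deployment. The first document is attached to the Lambda's execution role; the second is the trust policy (or is merged into the existing one) of each role in the other accounts.

```python
import json

# Hypothetical ARNs for illustration only; substitute your own.
LAMBDA_ROLE_ARN = "arn:aws:iam::111111111111:role/cloudquery-lambda"
TARGET_ROLE_ARNS = [
    "arn:aws:iam::222222222222:role/cloudquery-readonly",
    "arn:aws:iam::333333333333:role/cloudquery-readonly",
]


def lambda_assume_role_policy(target_role_arns):
    """Identity policy for the Lambda's role: allow sts:AssumeRole on each target role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "sts:AssumeRole",
                "Resource": list(target_role_arns),
            }
        ],
    }


def target_trust_policy(lambda_role_arn):
    """Trust policy for a role in another account: let the Lambda's role assume it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": lambda_role_arn},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(lambda_assume_role_policy(TARGET_ROLE_ARNS), indent=2))
    print(json.dumps(target_trust_policy(LAMBDA_ROLE_ARN), indent=2))
```

Both sides are required: the identity policy lets the Lambda request the role, and the trust policy lets the target account accept that request.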

Deploying and testing

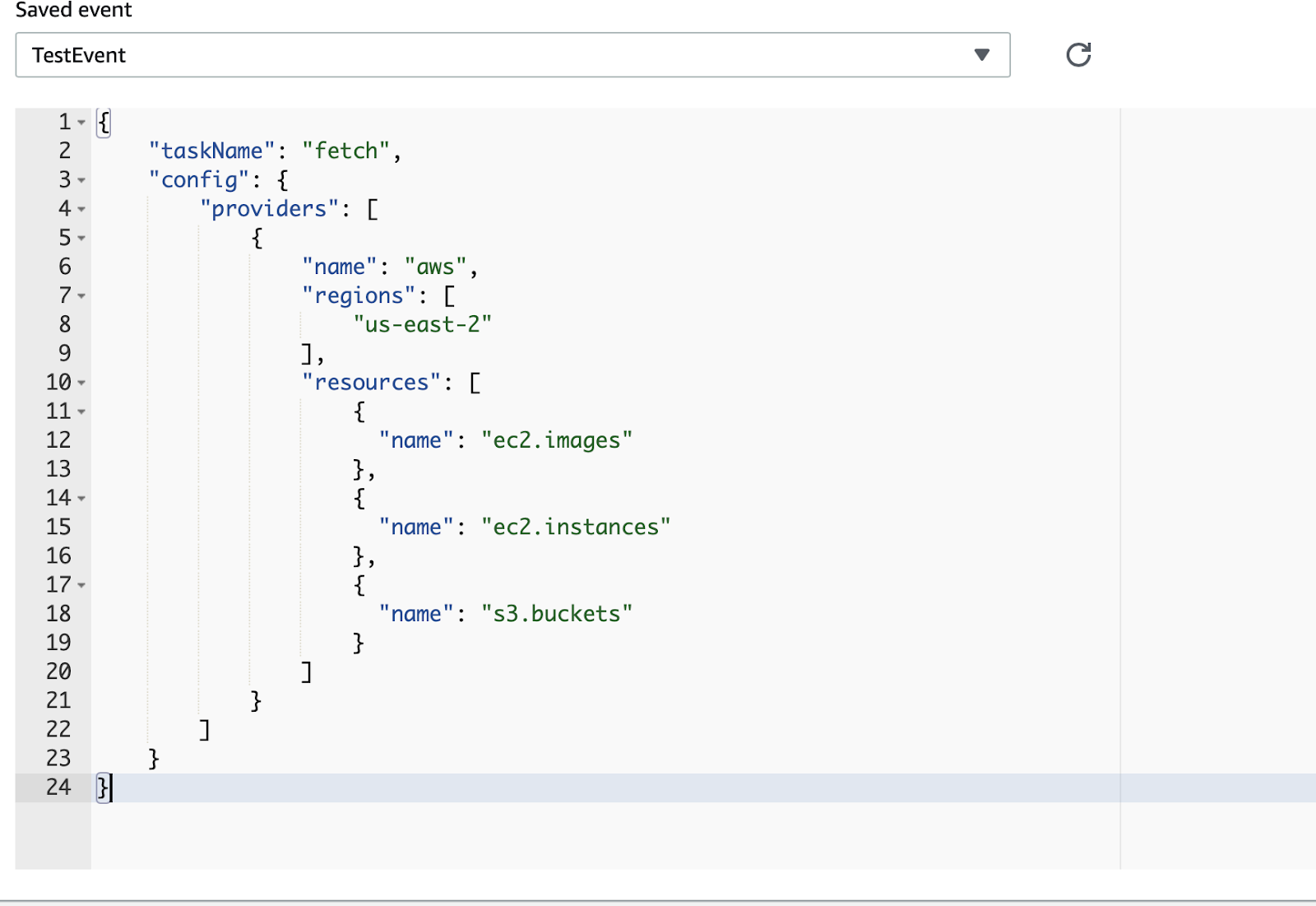

Once CloudQuery is deployed, you can test a scan from the AWS console by creating a test event that includes a task name and a CloudQuery configuration. The JSON schema is simple: it requires two top-level fields, one for the name of the task the Lambda will run and one containing your CloudQuery config. Here is an example event that gathers EC2 images, EC2 instances, and S3 buckets in us-east-1 of the account the Lambda is running in.
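For illustration, here is a minimal Python sketch of assembling such a test event. The top-level keys `taskName` and `config`, the task name `fetch`, and the nested config layout are all assumptions made for this sketch; check the Lambda handler in the repository for the exact field names, and generate the real config with `cloudquery gen`.

```python
import json


def build_test_event(task_name, config):
    """Wrap a CloudQuery config in the two top-level fields the Lambda expects.

    ASSUMPTION: the key names "taskName" and "config" are hypothetical.
    """
    return {"taskName": task_name, "config": config}


# ASSUMPTION: hypothetical config layout; use the output of `cloudquery gen` instead.
example_config = {
    "providers": [
        {
            "name": "aws",
            "regions": ["us-east-1"],
            "resources": [
                {"name": "ec2.images"},
                {"name": "ec2.instances"},
                {"name": "s3.buckets"},
            ],
        }
    ]
}

event = build_test_event("fetch", example_config)
print(json.dumps(event, indent=2))  # paste this JSON into the console test event
```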

You can easily convert a YAML configuration file generated with `cloudquery gen [provider]` to JSON with yq.

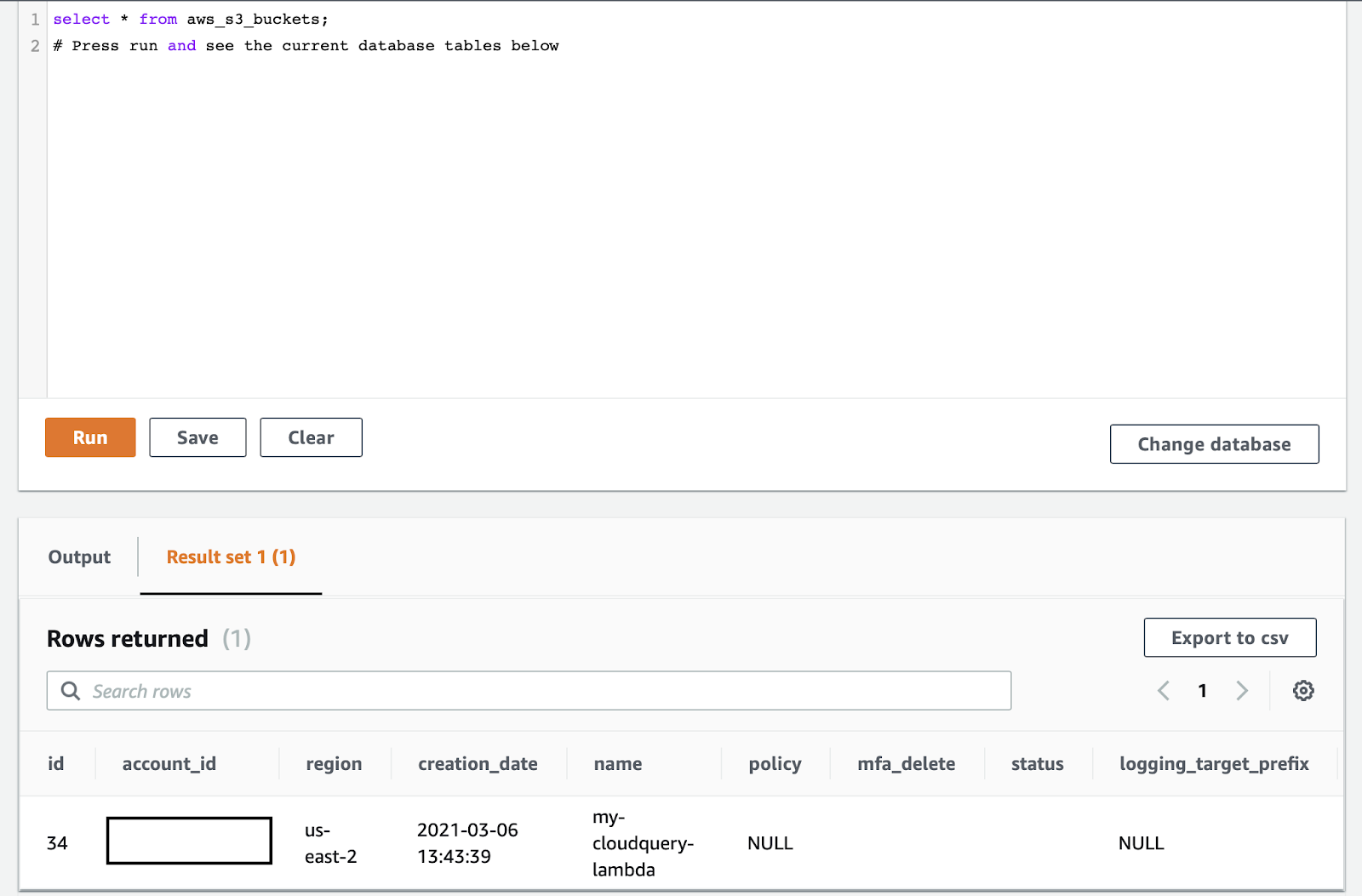

```shell
yq eval -j config.yml
```

After the test run completes, go to the RDS console in your AWS account, select the cloudquery database, and run a query using the Data API.

The master password for your database is stored as an SSM parameter at `/cloudquery/database/password/master`, and the username is `cloudquery`.
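If you would rather fetch the password programmatically than through the console, a sketch using a boto3 SSM client could look like the following. The function name is hypothetical; `get_parameter` with `WithDecryption=True` is the standard boto3 call for reading an encrypted parameter. The client is passed in, so the region is whatever you deployed to.

```python
PASSWORD_PARAMETER = "/cloudquery/database/password/master"
DB_USERNAME = "cloudquery"


def get_master_password(ssm_client, name=PASSWORD_PARAMETER):
    """Read and decrypt the SSM parameter holding the database master password.

    `ssm_client` is expected to be a boto3 SSM client, e.g. boto3.client("ssm").
    """
    response = ssm_client.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]


# Usage (requires boto3 and AWS credentials; region is a placeholder):
# import boto3
# password = get_master_password(boto3.client("ssm", region_name="us-east-1"))
```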

You can also run the compliance queries from the RDS console to check the compliance of the resources saved in this database.

Running automatically

To run CloudQuery on a schedule, create CloudWatch Events rules. Each rule can send its own payload to the Lambda, which lets you run CloudQuery in parallel per region, per account, or even per resource type.
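The example deployment defines its rules in Terraform. As a rough, hypothetical equivalent, the Python sketch below uses a boto3 `events` client to create one scheduled rule whose target is the CloudQuery Lambda, with the payload passed as the target's constant `Input`. The function name, rule name, target ID, and daily `rate(1 day)` schedule are illustrative choices, not values from the repository.

```python
import json


def schedule_fetch(events_client, rule_name, lambda_arn, payload,
                   schedule="rate(1 day)"):
    """Create (or update) a scheduled rule that invokes the Lambda with a fixed payload.

    `events_client` is expected to be a boto3 client, e.g. boto3.client("events").
    """
    events_client.put_rule(
        Name=rule_name,
        ScheduleExpression=schedule,
        State="ENABLED",
    )
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{
            "Id": "cloudquery-lambda",
            "Arn": lambda_arn,
            # Input must be a JSON string; the Lambda receives it as its event.
            "Input": json.dumps(payload),
        }],
    )


# Usage (requires boto3 and AWS credentials; ARN and payload are hypothetical):
# import boto3
# schedule_fetch(boto3.client("events"), "cloudquery-us-east-1",
#                "arn:aws:lambda:us-east-1:111111111111:function:cloudquery",
#                {"taskName": "fetch", "config": {}})
```

Note that the rule can only invoke the function if the Lambda has a resource-based permission for `events.amazonaws.com`, which you can grant with the Lambda `add_permission` API.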

There is an example CloudWatch event in the deploy/aws/terraform folder of the CloudQuery repository.

You can create additional CloudWatch triggers to run multiple fetch jobs in parallel across AWS accounts, regions, and resource types.
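To make the fan-out concrete, the following hypothetical helper enumerates one job per account, region, and resource group with `itertools.product`, giving each job a distinct rule name. The payload keys are placeholders for whatever event shape your Lambda expects, and the account IDs and resource group names in the usage are made up.

```python
import itertools


def fan_out_jobs(accounts, regions, resource_groups, task_name="fetch"):
    """Return one (rule_name, payload) pair per account x region x resource group.

    ASSUMPTION: the payload keys are hypothetical; adapt them to the event
    shape your CloudQuery Lambda expects.
    """
    jobs = []
    for account, region, (group, resources) in itertools.product(
            accounts, regions, resource_groups.items()):
        rule_name = f"cloudquery-{account}-{region}-{group}"
        # Rule names may contain letters, digits, '.', '-' and '_', up to 64 chars.
        if len(rule_name) > 64:
            raise ValueError(f"rule name too long: {rule_name}")
        payload = {
            "taskName": task_name,
            "config": {"account": account, "region": region,
                       "resources": list(resources)},
        }
        jobs.append((rule_name, payload))
    return jobs


jobs = fan_out_jobs(
    ["222222222222", "333333333333"],
    ["us-east-1", "eu-west-1"],
    {"ec2": ["ec2.instances", "ec2.images"], "s3": ["s3.buckets"]},
)
print(len(jobs))  # 8 (2 accounts x 2 regions x 2 groups)
```

Each pair can then be registered as its own scheduled rule targeting the Lambda, either in Terraform as in the example deployment or through the EventBridge API, so the jobs run independently and in parallel.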

Summary

Running CloudQuery in AWS gives you an easy-to-query SQL database of your AWS resources. You can run it on a schedule using serverless infrastructure and parallelize it by region, account, and resource type.